The Future of Innovation Powered by Big Compute

Joris:

All right. Well, thanks everyone for joining us, and welcome to Big Compute. I’m really excited to welcome you today here in the heart of Silicon Valley, the engine of innovation. We’ve got an incredible lineup of speakers, as Jolie just shared, and I encourage you to engage, explore, and work together with the thought leaders, innovators, and like-minded individuals who have gathered here today in our community to push the boundaries of what computation and innovation can help us drive in the future.

So to kick things off today, I’d like to step back and think about what computing, innovation, and technology have delivered over the last few decades. Where are we today, and where are we going? One way to look at technological progress is to take a time machine back a few decades and think about the innovations, technology, and computation we would have expected by now. Popular movies like Back to the Future predicted technologies like real-time video conferencing and ubiquitous communication. Not only have we delivered that, we’ve gotten much more. Social media, the gig economy, and services like Uber, Twitter, and Airbnb have truly connected us and changed our lives.

These services have been incredible and have really pushed our society forward. But there have also been areas where we haven’t gotten what we wished for: the home fusion reactor, sustainable energy for every home, flying cars. We’re starting to make progress, but we’re not there yet. So what really is the difference between the areas where we’ve succeeded or even beaten expectations and the areas where we’re still struggling to push the boundaries forward?

Well, I’d like to think about this in two major categories. The first is digital innovation: the innovation of bits. The connectivity-driven software innovations we’ve seen here have really transformed us. The vivid picture you see here is Vatican City during the inauguration of the Pope. In 2005, there was a single person in the back row with a feature phone trying to take a picture, and it was probably a terrible picture. Less than a decade later, every single person in that crowd was able not only to take a high-definition picture themselves, but to instantly share it with anybody they wanted around the world. That’s truly incredible, something we wouldn’t even have been able to predict.

Over the same timeframe, let’s look at what we’ve accomplished in the applied sciences: physical innovation, the world of atoms. The most popular car, the Toyota Camry, didn’t change; it’s still the Toyota Camry. And if we look at the actual vehicle, we’ve gained a few miles per gallon here or there. But I think we can all agree that we haven’t delivered the future that would have been possible. So why is that?

You’ve probably heard thought leaders in technology like Buzz Aldrin, who has claimed that we’re just using technology to build widgets to make a quick buck, and Peter Thiel, who has made a very clear point about technological stagnation: that we haven’t gotten the future we were promised. And to a certain extent, they’re right. Think about supersonic flight, a technology that already existed, yet in the last decade nobody has flown supersonic commercially. Then there’s the life expectancy challenge. If we plot the progress over the last 10 years, not only have we slowed down, but in the US over the last few years, life expectancy has actually been going down. This is a real challenge we need to overcome. And finally, sustainable energy and climate change. Even with the impending doom of climate change, we have yet to deliver the applied science innovation it takes to solve this challenge.

These challenges are fundamental to the future, and I believe they are the true technology challenge of our time and our generation. Applied science and engineering innovation drives our society forward, and these are the key innovations we need in order to progress into the future we all want. Now, as the old adage goes, nothing worthwhile is ever easy. Applied science innovation is really hard, and there’s a reason we haven’t seen as much progress there. There are a few key pillars that act as barriers.

The first is regulation, and regulation can certainly be a good thing. I think we can all agree that crappy video conferencing is really not the same as a crappy airplane. The second challenge is the monetization cycle. With real-time connected services, we’re able to monetize, drive businesses forward, and scale them quickly and easily. In applied science innovation, by contrast, we actually have to do the physical R&D and production of things like rockets and drugs, and go through the actual development process for medical devices. These take a long time, so the payback periods can be a lot longer. The payoff could also be a lot bigger, but this is a key challenge for financing these types of innovations.

And finally, the technology cycle. This is an area I’d like to explore a little more deeply with you today, because I think technology is where we can do a great job. If we explore why the applied sciences technology cycle is so challenging, I think it comes down to three key areas: first, human intellect; second, the big data explosion; and third, big compute. Let’s explore these. First, human intellect. We are minting more engineers and scientists every day than ever before. We have millions of people with degrees, PhDs, and expertise who can help us push society forward. However, we’re taking experts like PhDs in aerodynamics and PhDs in materials science and turning them into data scientists to count ads at Facebook. So we have the people, and that’s certainly not the challenge; I think we can redirect them to drive innovation forward faster. Human intellect is not the challenge.

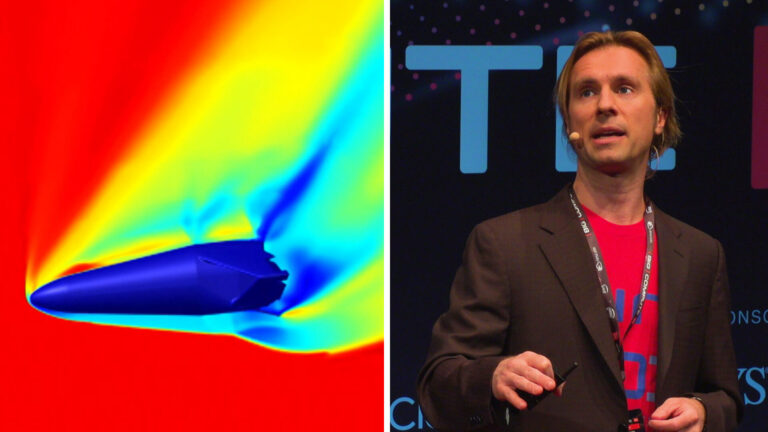

Next is data. As we all know, data has been growing explosively over the last decade; in the last 18 months alone, we’ve created more data than at any other time in human history. So we’ve got plenty of data. If the real challenge is not data and not human intellect, I would say it’s big compute. And for me, this is a very personal story. Fifteen years ago, I was one of the engineers working on the 787 Dreamliner. The 787 was designed to be a revolutionary aircraft, the first airliner built largely from carbon fiber composites. We had an enormous engineering challenge. We had to build the digital twin across all the disciplines of applied science, like aerodynamics, structural design, and many others, and the wing alone had over 20 million design variables. We had to iterate through these simulations to reach the optimal design and make this airplane fly.

The first time we put all these different pieces of software together and used machine learning and optimization techniques to find the best design, a single iteration would have taken three years. Once we realized that, we knew we couldn’t solve the problem that way, so it became a computer science problem. We had to build a distributed systems architecture and harness the data centers of different business units around the world just to run the computations needed to optimize the wing design. Several years later, we were finally able to deliver some incredible results. We saved several hundred million dollars in weight savings alone, and we took a design cycle that had been taking three months all the way down to less than 24 hours. Why 24 hours? Because we could only use that compute over the weekend; during the week, other people were using it.

Now, it really shouldn’t have been that hard. Engineers and scientists shouldn’t be constrained by computational barriers in the innovation they can deliver. That is why, 10 years ago, we founded Rescale, with the mission to enable and empower the world’s engineers and scientists to drive these applied science innovations and deliver the future we really want.

Many of you might say, “Well, over the last 10 years we’ve seen cloud computing take off, right? Don’t we already have access to infinite computing?” The problem actually runs a bit deeper than that. Take hyperscale compute, a category of computation built on commodity servers. It solves problems with a certain topology very easily: loosely coupled problems, where you can chop a single problem into pieces and solve each piece independently on its own processor. Hyperscale computing services have all been built on this, and it’s incredibly powerful. This kind of capability is what lets you build connectivity-driven services like Uber, Twitter, and Airbnb. They all leverage simple services that developers can build on, like fetching files, database queries, and graphics rendering, each of which boils down to simple, independent computations.
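To make the idea of a loosely coupled problem concrete, here is a minimal Python sketch. The per-item function `score_record` and the driver `run_loosely_coupled` are hypothetical stand-ins for independent requests such as file fetches or database queries; nothing here is taken from the talk or from any Rescale product. Each item is processed with no knowledge of the others, so the work can be handed to any number of workers and the results simply collected.

```python
from concurrent.futures import ThreadPoolExecutor


def score_record(record: str) -> int:
    """Hypothetical per-item task, e.g. handling one request or one file.

    It reads only its own input, so it never needs to talk to other tasks.
    """
    return sum(ord(ch) for ch in record) % 97


def run_loosely_coupled(records: list[str], workers: int = 4) -> list[int]:
    """Fan independent tasks out to a worker pool and collect the results.

    pool.map returns results in input order, whatever order tasks finish in.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(score_record, records))


if __name__ == "__main__":
    requests = [f"request-{i}" for i in range(8)]
    print(run_loosely_coupled(requests))
```

Threads are used only to keep the sketch self-contained; because of CPython’s global interpreter lock, CPU-bound work like this would normally be spread across processes or separate machines instead. Either way, the answer does not depend on how many workers run it, which is the defining property of a loosely coupled workload.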

On the other hand, we have big compute. This is a class of problems that we can’t just chop into pieces and solve independently. We have to run the calculations on individual processors and have those processors communicate with each other as the algorithm proceeds. This class of problems is core to the applied sciences: from fluid dynamics in aerodynamic calculations, to finite element analysis in structural crash simulations, to many other disciplines from acoustics to molecular dynamics. They all depend on physics, and physics simply scales differently. We cannot rewrite the laws of nature. That is why big compute is fundamentally different, and critical for us to push forward.
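By contrast, here is a toy sketch of a tightly coupled problem, assuming a one-dimensional heat-diffusion model solved with an explicit finite-difference update. The grid is split into chunks that stand in for separate processors (all inside one Python process, for illustration only), and every function name is hypothetical. Because each grid point’s new value depends on its neighbors, every chunk must receive the edge (“halo” or “ghost”) values from adjacent chunks before every time step. Production solvers typically perform this exchange over a network with a library such as MPI.

```python
# Toy model: 1D heat diffusion, explicit finite differences, fixed-value ends.
# "Processors" are simulated as list chunks inside a single Python process.


def step_serial(u, alpha):
    """One explicit time step over the whole grid (reference solution)."""
    new = u[:]
    for i in range(1, len(u) - 1):  # endpoints are fixed boundary values
        new[i] = u[i] + alpha * (u[i - 1] - 2 * u[i] + u[i + 1])
    return new


def split(u, parts):
    """Partition the grid into equal chunks (assumes len(u) % parts == 0)."""
    size = len(u) // parts
    return [u[k * size:(k + 1) * size] for k in range(parts)]


def exchange_halos(chunks):
    """Communication phase: each chunk receives its neighbours' edge values.

    None marks a physical boundary where there is no neighbour.
    """
    halos = []
    for k in range(len(chunks)):
        left = chunks[k - 1][-1] if k > 0 else None
        right = chunks[k + 1][0] if k + 1 < len(chunks) else None
        halos.append((left, right))
    return halos


def step_chunk(chunk, left, right, alpha):
    """Computation phase: update one chunk using its ghost values."""
    padded = [left] + chunk + [right]
    new = chunk[:]
    for j in range(len(chunk)):
        lo, mid, hi = padded[j], padded[j + 1], padded[j + 2]
        if lo is None or hi is None:
            continue  # global endpoint: keep the fixed boundary value
        new[j] = mid + alpha * (lo - 2 * mid + hi)
    return new


def step_decomposed(chunks, alpha):
    """One time step: exchange halos first, then update every chunk."""
    halos = exchange_halos(chunks)
    return [step_chunk(c, left, right, alpha) for c, (left, right) in zip(chunks, halos)]


if __name__ == "__main__":
    grid = [1.0] + [0.0] * 11  # hot left wall, cold everywhere else
    serial, chunks = grid, split(grid, 3)
    for _ in range(50):
        serial = step_serial(serial, 0.25)
        chunks = step_decomposed(chunks, 0.25)
    print([round(x, 4) for x in serial])
```

Removing the exchange would break the result: with no halo values, heat from the left wall could never cross into the second chunk. That per-step communication is what keeps these workloads from being chopped up like loosely coupled ones, and it is why the speed of the interconnect between processors matters so much for them.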

The challenge of big compute comes down to three major areas. The first is specialized hardware. Because of the nature of these problems, we have to leverage advances in specialized hardware to solve these tightly coupled problems. The second is the need to think differently about the compute stack: how we align the algorithm to the infrastructure. And the third, perhaps the most important, is that we have to make this capability usable and democratize it for the world’s brightest minds and innovators.

Let’s look more deeply at the first. As we all know, Moore’s law has been slowing down. The gains in general-purpose compute that powered all these technological innovations are tapering off: instead of doubling every two years, general-purpose processors are improving more slowly. However, there’s a solution to this problem: specialized architectures. We’ve seen incredibly innovative companies come up with unique architectures that help address this gap. By moving to more specialized architectures, we can solve a specific set of problems more efficiently. These include GPUs, programmable chips like FPGAs, and even Google’s Tensor Processing Unit, a custom chip built specifically for neural network workloads. We all know the machine learning revolution has really taken off, but the real reason it has taken off is the advancement in compute capability. Without specialized processors, we wouldn’t be able to solve the machine learning problems behind some of the most critical technology advances today.
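As a back-of-the-envelope illustration of the doubling described above, suppose performance $P$ grows from a starting level $P_0$ and doubles every $T$ years. The stretched doubling time of 3.5 years below is a hypothetical value chosen only for illustration, not a measured figure.

$$
P(t) = P_0 \cdot 2^{t/T}, \qquad
\left.\frac{P(10)}{P_0}\right|_{T=2} = 2^{5} = 32, \qquad
\left.\frac{P(10)}{P_0}\right|_{T=3.5} = 2^{10/3.5} \approx 7.2
$$

Under these assumptions, even a modest stretch in the doubling time cuts the decade-long gain by more than a factor of four, which is the kind of gap specialized architectures aim to close.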

Second, we need to rethink how we build infrastructure and how we use it at the software layer. Historically, we’ve built the data centers and infrastructure first, then added services and abstraction layers on top to make them more usable for people like software developers in the connectivity-driven innovation world. These digital innovations are super powerful, and they are so precisely because they can abstract up that additional layer. However, the same approach can’t simply be applied to the specialized computing landscape. We need to think workload-down, software-down, algorithm-down. We first need to ask what problem the scientist or engineer is actually solving, and then build the technology and capability in the stack to solve that problem as efficiently as possible. Just as you wouldn’t run a triathlon in the loafers you walk out the door with, we need to rethink the stack and how we approach solving compute problems in general.

The third component really comes down to how we make this usable and accessible to the stakeholders who are actually going to drive the insights and computations we need. For digital innovation, we already have this stack. We have commodity hardware in the hyperscale clouds, abstraction layers, containers, and many different services and orchestration layers that the infrastructure-as-a-service cloud providers use to build services for developers, so they can bring their applications to bear. On the engineering and science side, we need exactly the same thing. To bring these applied science innovations forward, we need to rethink that stack and deliver it to engineers and scientists in an easy way, because engineers and scientists shouldn’t need another PhD in computer science just to solve their problems.

If you look at this ecosystem more broadly, we’ve seen an incredible amount of digital innovation. You can see the successful companies here, and there’s been incredible progress from both the cloud providers and many other stakeholders in that ecosystem. But over the next two days, I encourage you to really think about applied science innovation, and let’s focus on solving the entire stack for the applied science innovation problem. This is the big compute problem; this is the ecosystem we’re building and the community that needs to thrive to push innovation forward. Solving these compute problems requires a software-defined approach, and at Rescale, we’re here to help you do that.

If we can accomplish that, we’ll be able to solve the fundamental problems of our future. We’ll be able to deliver not only specialized chips through innovations in electronic design automation, but also quantum computing and the fundamental future of how we do computing. In aerospace, we’ll be able to deliver the supersonic jet we always wanted. We’ll be able to travel in space. We’ll be able to solve some of the most critical challenges in aerospace and build much more efficient transportation. We’ll be able to solve the energy sustainability crisis we have on our hands. These are all applied science innovations we need to push the boundaries here. And finally, drug discovery and personalized medicine is a critical area where we have not yet used computation the way we could to deliver the healthcare we all want.

So finally, with this technology stack, we can have these breakthroughs, but we really need one more thing, and that’s courage. We need the courage of the innovators behind the legacy enterprise IT stack to rethink how they build and deliver that stack. We need the courage of the engineers and scientists who are going to drive these innovations, not only to push the boundaries forward, but to found the companies that will transform our future. And we need your courage and your thinking over the next few days to build this community and push this ecosystem forward to solve the most critical problems of our generation. So I hope you’ll join us today and tomorrow, and together let’s build the future we really want and deserve. Thank you.