HPC in the Cloud: Innovation Without Infrastructure Constraints

Barry:

Thank you so much. That was a great talk, especially since I just got off a 10-hour flight from London last night to get here. I certainly would have loved to make that a five-hour flight, but we live in the world we live in. So thank you very much.

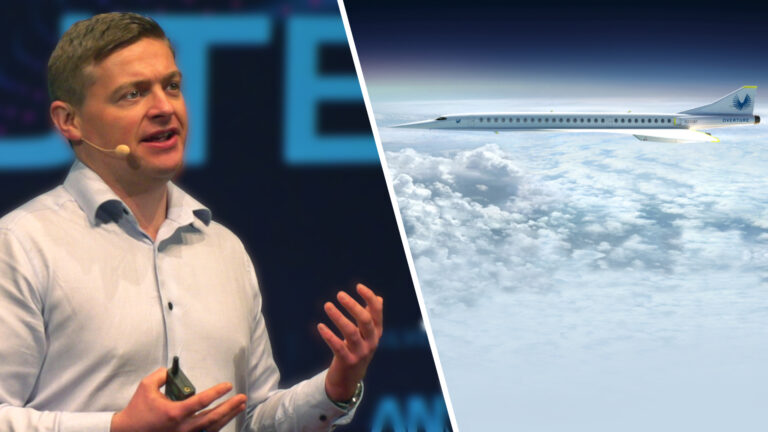

I just want to spend a little bit of time talking about high performance computing and give you a broad perspective on what I see as the innovation that cloud brings to high performance computing, and its ability to break some of the constraints that we’re used to. You heard from Boom, and that’s a great example: an engineering firm that is agile, that’s able to do the design work, and that’s advancing on work done by governments 50 years ago, when budgets were massive and resources were massive. These agile engineering firms are really going to change the landscape of the environment we live in, and they’re going to change the way that we do high performance computing and modeling.

So let me start. Aircraft design is just one of those areas. Traditionally it was one of the first places where high performance computing had an impact on manufacturing and design, but today high performance computing is everywhere, and companies are innovating in every design phase and every space, from how they design their aircraft to their coffee makers, so that they don’t break, aren’t as fragile, and can handle hot and cold temperature fluctuations. One of the massive areas of high performance computing springing up today is autonomous vehicle simulation and modeling. That’s an extremely complex workflow, where you’re combining traditional simulations with training methodologies, especially distributed training methodologies, and it’s a really interesting one. Many of those companies have sprung up in a cloud native environment. They’ve never owned data centers.

The fuel that you use in your jet aircraft or your cars, the batteries that you design, the weather forecast that comes through on your cell phone every day, and even your retirement portfolio all depend on high performance computing simulation and modeling. The simulations that investment banks run to calculate the risk on financial portfolios are a high performance computing problem. So I’m going to touch on a few of those today, and on why they break the old constraints that we’ve lived under with on-premise solutions for the last 50 years.

One question that I think every engineer would love to ask is: what could I design if I had unlimited resources? Whether it’s the resources sitting in my data center closet, in my small company’s data center, or in a massive data center owned by the multinational corporation I work at, what if those constraints were no longer there and I didn’t have to worry about whether I’m going to be able to do this simulation, or whether it fits in the technology we bought three or five years ago? That’s a very liberating question. At AWS, and in the cloud generally, you have access to immense resources, and we’ve begun to talk to a class of customers who are asking us how they can rethink their simulation and modeling workflows and really begin to break those constraints.

One of the first examples we had was with Western Digital. They had a workflow for designing their disk drives, where they do electronic modeling of the drives. This was typically a simulation they ran on premise, and it took 20 to 30 days. We reimagined the problem with them, looking at how to distribute those jobs across all of the resources and capability that the cloud provides, and they were able to compress that simulation and modeling problem down to eight hours. This is not an unusual situation. In fact, the same types of workflows that Western Digital was looking at apply, as I said, to financial services, where every night when the market closes, firms need to do that risk calculation and get it done by the next morning. Usually that’s a very predictable calculation, except when high-risk events occur. Say some major country changes its interest rates dramatically, or some type of inflation hits: suddenly the portfolio risks they’re calculating are much more complex, and they need access to far more resources than they’ve planned for.
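The speedup implied by those figures is easy to check. The short Python sketch below only restates the numbers quoted in the talk (20 to 30 days on premise versus eight hours in the cloud); the helper name `speedup` is illustrative and not part of any AWS tool.

```python
HOURS_PER_DAY = 24


def speedup(before_hours: float, after_hours: float) -> float:
    """Ratio of the original wall-clock time to the new wall-clock time."""
    return before_hours / after_hours


# Figures quoted in the talk: 20 to 30 days on premise, 8 hours in the cloud.
CLOUD_HOURS = 8
for days in (20, 30):
    factor = speedup(days * HOURS_PER_DAY, CLOUD_HOURS)
    print(f"{days} days -> {CLOUD_HOURS} hours: about {factor:.0f}x faster")
# 20 days -> 8 hours: about 60x faster
# 30 days -> 8 hours: about 90x faster
```

The same arithmetic applies to the Fred Hutch example later in the talk: seven years down to seven days is roughly a 365x reduction in wall-clock time.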

So the question “What could I do if I had access to a million cores?” is not one you have to be at a major multinational to ask anymore. It could be an engineer who only wants access to those million virtual CPUs for 10 or 15 minutes, and that’s not an immense budget issue.

Last year we had an AWS customer, Descartes Labs, which does image processing of satellite data. With the Supercomputing conference coming up, the company’s CEO said, “I wonder if I can just spin up 40,000 cores and do a TOP500 run?” And he literally pulled out his credit card. AWS was not aware of this. He plopped down his credit card, made a purchase, and ran the job. Now, High-Performance Linpack is a benchmark rather than a production application, but it is indicative of this ability of an engineer to quickly get access to resources.

One of the things this does require of the engineer, the company, and even the management teams at these companies is to begin to rethink how they approach the problem. At Amazon, we have a philosophy of interacting with customers that is very light on PowerPoint. We actually walk into customer meetings without roadmaps and without canned presentations, because we want to start from the customer experience and work backwards. If you’re going to start rethinking how applications are done and the workflows you have in high performance computing, that’s a really powerful method. We do this whether it’s a two-hour meeting with a customer or a deep dive, what we call a re-imagining of your workflow, where we’ll spend a couple of days with the customer, dive deep into every aspect of the calculations they’re doing, and then ask, “Well, if you weren’t hindered by constraints, what could you do?”

Those re-imagining processes cover operational issues. We talk to customers about security, governance, and compliance issues, and about cost management: all those nitty-gritty details you have to deal with. Those used to be the big hurdles for customers, but every day they drop away further. Compliance and security now take only a few minutes of discussion about exactly what levels of compliance are required, rather than an effort to prove to the customer that the cloud is a safe place to do their simulations.

So we spend a lot more time on the functional issues, and that’s really where the value is. Functional issues like: How can I improve my development cycles? How can I make them faster? What tools do I need to derive insights? What visualization, machine learning, or AI components do I need to put into the workflow? This type of discussion is very powerful for companies that really want to take a new approach to high performance computing.

So we get into these discussions, we talk about the functional requirements, and then about how those map into innovation. That’s really where you get the value: things like agility and elasticity, and the ability to interchange services. You no longer have to predict. When I got into this business, I worked for a long time at Cray and IBM, and we were good at predicting what technologies customers might need when they were making an investment for three to five years, and at helping them mitigate the risks associated with those investments. But engineers today shouldn’t need to worry about making those technology bets. They shouldn’t have to bet on a technology for three to five years. They should be able to bet on their application, on their science, and the best technology available on whatever day of the year it is should be at their disposal to solve those problems. That’s the powerful flexibility, the agility, that cloud provides.

I want to talk through a couple of examples. I’ve put some logos up here because they represent various types of high performance computing workloads. Take the tightly coupled simulations that Blake was talking about with Boom, where they’re running computational fluid dynamics models of the air intake into jet aircraft engines. That’s a tightly coupled high performance computing model. It requires excellent networking, storage, and compute technologies, all very balanced.

Another example is a customer called Maxar that does weather forecasting, and their goal is to beat NOAA to the weather forecast every day. Accuracy isn’t necessarily their edge over the National Weather Service; they want to get to the answer a few hours earlier. The value in that is that their customers get predictions of storms and the impacts of severe weather faster than the rest of us do, and they can make business decisions based on that.

There are companies in the financial services space, too. At our re:Invent conference in December, Morgan Stanley’s director of HPC joined us as a guest speaker and talked about how deep engagement on the workflow of their risk calculations was helping them rethink the agility and elasticity they can bring to bear. That’s a company with very large on-premise HPC technologies, but they’re beginning to ask: what do I need to do to have more agility, and more access to technologies as they evolve, rather than making long-term, risk-based acquisitions of technology that may or may not pan out? That includes access to GPUs, FPGAs, the best software, new services as they evolve, and the incorporation of AI and ML into the workflow.

Formula 1 is up here because they gather so much data from their cars that they want to be able to do machine learning on the fly while the cars are driving. They want to access that data, do simulations, and then couple that with training models.

The autonomous vehicle space is really interesting. That’s an area that’s going to explode. Today most autonomous vehicle companies have on the order of 500 cars on the road, maybe 1,000, maybe 100. They’re gathering data from those automobiles, so there’s a data ingest issue. They need to move data off of those cars, either potentially while the cars are driving, or by bringing them in, parking them, and offloading the data. So there’s an ingest and egress question they have to solve. Then they have to solve a simulation problem and a training problem.

First, they get the data off the cars and retrain their models on all the new data. Now, you don’t want to feed that model back into the cars immediately, because you don’t know how it will behave. Maybe with the newly trained model, you don’t recognize that dog crossing the road quite as well as you did before you retrained. So they literally send the model into simulations: millions of miles of simulated driving. It’s a beautiful, embarrassingly parallel problem. You are literally simulating millions of cars driving on random roads, so we can expand that simulation as much as we want. We can run it on 100,000 cores or on half a million cores and get it done very quickly, do the analysis, confirm that the newly trained model is good, send it out to the cars, and the cars can keep driving. You can see how much of a bottleneck that could be if you’re not able to get the newly trained model out to the automobiles as quickly as possible.
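Why this workload scales so easily can be sketched in a few lines of Python. The example below is a toy under stated assumptions: `simulate_drive` is a hypothetical stand-in for a real driving simulator (each simulated mile is just a biased coin flip), and a local process pool stands in for a fleet of cloud cores. What it does show is the structure described above: every simulated drive depends only on its own random seed, so the drives never communicate and can be spread across as many workers as are available.

```python
import random
from concurrent.futures import ProcessPoolExecutor


def simulate_drive(seed: int, miles: int = 100) -> int:
    """Hypothetical stand-in for one simulated drive.

    Returns the number of simulated 'missed detection' events. A real
    simulator would replay scenarios against the retrained model; here
    each mile is only a biased coin flip.
    """
    rng = random.Random(seed)  # independent, reproducible stream per task
    return sum(1 for _ in range(miles) if rng.random() < 0.001)


def run_campaign(num_drives: int, workers: int = 4) -> int:
    # Each drive depends only on its own seed, so tasks never exchange data:
    # this independence is what makes the workload embarrassingly parallel.
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(simulate_drive, range(num_drives), chunksize=64))


if __name__ == "__main__":
    misses = run_campaign(num_drives=10_000)
    print(f"missed detections across 10,000 simulated drives: {misses}")
```

Because the tasks are independent, scaling out is mostly a matter of raising `max_workers` (or, on a cluster, the number of nodes), and per-task seeding keeps each drive reproducible no matter which worker runs it.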

So high performance computing in the cloud, with that agility and elasticity, is really allowing companies across the spectrum to take new approaches and tackle new engineering problems. It’s really opened up competitiveness in the space.

Blake was talking about Boom, and again, I just want to say how amazing it is that these small engineering firms (and Boom’s not that small) are competing with the Airbuses and Boeings of the world. They need to be able to do these simulations and models in the most cost-efficient, most agile way possible, and to take their applications and fit them to whatever architectures are available.

In the old on-premise model, we made three-to-five-year predictions about the infrastructure, and then we were forced to fit our applications to whatever infrastructure we ended up acquiring. If we made a bet on how much GPU, CPU, or FPGA capacity we were going to need, we were tied to that bet for a long period of time. We need to escape that model and enter a world where every single application in our portfolio is fitted to the infrastructure that is most optimal for it.

I’ll give you an example. A few weeks ago I went into a major pharmaceutical company. You’d think the discussion would be about drug design, or genomics, or Nextflow, or one of their other applications. No. We were having a discussion about CAE, about engineering, because they were doing drop testing and device modeling. It wasn’t a big part of their HPC environment, but it was significant. It was an engineering workload we’re very familiar with, very similar to the models we heard about in the previous presentation, using third-party codes like OpenFOAM, applications from vendors such as ANSYS and Altair, or Siemens, who’s talking later, with their STAR-CCM+ application. Those were the applications they were running.

This small engineering team needed the best equipment, the best infrastructure to run on, and here they are, part of a pharmaceutical company. Every one of these HPC customers out there has hundreds of use cases and applications, and cloud frees them to fit each application to the best infrastructure. This team doesn’t have to make a prediction about technology. They don’t have to be technology experts predicting whether GPUs or CPUs are best. They want to do the science and solve the problem. So we want to live in a world where every application runs on the most innovative infrastructure.

Rescale, which is part of this conference, is a company designed to answer that question and help customers get to the answer: how do I get to the best infrastructure for my application, and do that efficiently?

We’re used to living in a box; that’s the traditional on-premise world. We live in a box and fit our mindset to that box. We want to move out of that into a world where every day is different: today you can be redesigning engines, tomorrow you can be running structural analysis simulations, and the next day you can incorporate machine learning into your models. That’s the world where you’ve escaped those constraints, and the engineer is free to dream about what applications they need and what scientific problems they can solve.

I’ll finish with an example where AWS worked with the Fred Hutchinson Cancer Research Center. They have a department doing work on microbiomes: basically the organisms that share our bodies, and how those can affect cancers. They have huge numbers of biological samples and huge amounts of patient data that give them insights they can start investigating, mapping disease to the biological ecosystems that live within our bodies. They want to see whether there are effects on treatment: whether a particular treatment is effective for one individual, and whether that’s influenced by the microbiome that person has, compared with the same treatment for another individual.

As you can imagine, these are huge data sets, where they’re trying to map two disparate pieces of information together and look for insights. They would do this on their in-house platforms, and they initially estimated that this was just too big a project: it would take seven years to analyze all the data. Working with AWS, they were able to free their thinking from the constraints of their infrastructure and just think in terms of the problems they wanted to solve.

By using exactly the infrastructure they needed, mapping their applications to the appropriate technology, they were able to do in seven days the simulations they had projected would take seven years. That elasticity really unbounds the problem. And they don’t have to buy that infrastructure for five years. They only need it for those seven days, and then they’ve solved that part of the problem. They can come back to it later if they need to, but they’re literally able to unbound their thinking.

So I think I have a couple of minutes left. I’ll take any questions if you have any.

Question from the audience:

Thanks, Barry. Appreciate the talk. All right, here we are. I loved your story at the beginning about plunking down the credit card and saying, “Here, I’ll do it.” That’s actually what scares some people: all of my engineers can just go do that. The question is whether that engineer has the budget authority to buy however many cycles they want on the fly and then get reimbursed. Can you talk about what tools AWS has for system accounting and cost control when I move beyond my internal lab and its system accounting? I know you’ve got tools here, but that’s a scary point.

Barry:

Yeah, that’s a great point. In fact, the way AWS sets up defaults, we’re usually very cautious about that. By default, most accounts don’t have access to very large quantities of resources, because we don’t want customers to get into a situation where they’re overspending. We have a number of tools that put this power under the control of the managers, the infrastructure owners, and the buyers. We have AWS Budgets, a set of low-level tools that can be configured in a number of ways, and we work to customize those for different customers. We also have partners like RONIN, which came out of the academic space. They’ve built on top of several of our services, like ParallelCluster, and they’ve built tools that can make proactive cost predictions, assign accounting codes to the different AWS account IDs, and put in controls that act as a pause button.
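To make the “pause button” idea concrete, here is a minimal Python sketch of the kind of control described above. It is not AWS Budgets or RONIN code and calls no AWS API; `ProjectBudget`, the accounting code, and the dollar amounts are all hypothetical. It models one design choice: a job is approved only if its estimated cost fits in the remaining budget, and the first request that would overrun the limit pauses the project until a manager raises the limit.

```python
from dataclasses import dataclass


@dataclass
class ProjectBudget:
    """Hypothetical per-project budget with a 'pause button' (not an AWS API)."""

    accounting_code: str
    limit_usd: float
    spent_usd: float = 0.0
    paused: bool = False

    def request(self, estimated_cost_usd: float) -> bool:
        """Approve a job only if its estimated cost fits in the remaining budget."""
        if self.paused or self.spent_usd + estimated_cost_usd > self.limit_usd:
            self.paused = True  # press the pause button; a manager must lift it
            return False
        self.spent_usd += estimated_cost_usd
        return True

    def resume(self, new_limit_usd: float) -> None:
        """Manager action: set a new limit and lift the pause."""
        self.limit_usd = new_limit_usd
        self.paused = False


budget = ProjectBudget("CFD-intake-study", limit_usd=1000.0)
print(budget.request(600.0))  # True: fits within the limit
print(budget.request(500.0))  # False: would overrun, project is paused
print(budget.request(10.0))   # False: still paused until a manager resumes
budget.resume(2000.0)
print(budget.request(500.0))  # True: 1,100 of 2,000 now spent
```

Pausing on the first overrun, rather than silently rejecting just that one job, forces a human decision before any further spend, which matches the concern raised in the question.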

So that shows both the basic tools we provide and the tools our partners are building on top of AWS. We are a company for builders, so we like to build the low-level tools and then let partners work out how to take maximum advantage of them.

I think it’s also important to note that this is going to be an issue anywhere. It’s an issue when you spend on something for five years that may or may not fit your needs three years from now. You don’t see that cost, though; it’s gone. So when you get to year three and realize you don’t actually have the right infrastructure, and you start buying additional compute nodes of a different architecture or variety, you’re really adding to the total cost you’ve spent. Those are hidden costs in the on-premise model. But I will say that it’s a big concern, and AWS puts in a lot of controls to make sure our customers don’t do something foolish.

Question from the audience:

Thank you for that wonderful talk. It’s all well and fine that I can rent 100 million cores, or some outstanding number, out in the cloud and run on them, but my data’s here. A car with 30 sensors has terabytes of data that aren’t going to go anywhere at 4G or 5G speeds. How do you bring AWS to me instead of me going to AWS?

Barry:

Yeah, excellent question. There are a number of approaches. We have AWS Outposts, which we announced, and which lets us put a branch of AWS at a factory, on a factory floor, or at any location where you need low-latency access to local data along with access to AWS resources. That’s one solution, and it came from hearing customers ask for that ultra-low-latency access to data.

We also do these re-imagining projects. We did one with a seismic company that has data on ships and needs to get it off the ships. We sat down and looked at that workflow and at what we can do, from using satellites with some of the AWS services we have there, to AWS Snowball devices, which can store data and then transport it quickly.

So there’s a whole range of solutions. Maybe I can go into them afterwards, but every customer is going to have a different slant on that. And I think what cloud does is it gives you a set of tools that you can use to approach that problem.

Question from the audience:

Question here, question here. Thank you, Barry, for your talk. For those companies sitting on the edge, waiting to jump into the cloud and the promise of big compute there, but held back by concerns or worries of one kind or another: what innovations do you anticipate in the next year or two that will push them over the edge?

Barry:

I do think the power of machine learning, and the incorporation of machine learning into HPC workflows, is going to drive a class of users over, because that workflow is so complex that purchasing a single infrastructure to handle it is very difficult. We see that in the seismic space, where people want to do machine learning on seismic images of the subsurface and then use that to enhance or accelerate the optimization models they run on those data sets. We see that in the autonomous driving I mentioned. We see it in the life sciences, where people want to begin to replace, say, QSAR, or quantitative structure-activity relationship, methodologies with machine learning methodologies. So I think that’s going to be a driver, probably the biggest one I see, but there are a whole host of others. One is making the cloud simpler to use, and solutions like Rescale’s are really valuable there, because companies that don’t want to build, that just want to do the engineering, get a lower activation barrier to get into the cloud. And that’s critical.

All right. Thank you very much.