Accelerating the Pace of Scientific Discovery via Cloud HPC

Speaker:

Please welcome to the stage cloud solutions architect at Google Cloud, Jamie Kinney.

Jamie:

I’d like to thank the organizers of the conference and also everybody who’s here in person or listening to the livestream for taking the time today to join us to discuss the themes related to big compute. I’d like to use the next 20 minutes or so to focus on the many ways that Google Cloud is helping to accelerate the pace of scientific discovery.

Jamie:

Over the past 10 years that I’ve focused on cloud computing in particular, and over 20 years in high technology, I’ve had the good fortune of working with a number of institutions. And what I’d like to do today is share some of the stories of my experiences and the experiences of my peers, and how Google is helping researchers, scientists and engineers all over the world ask bigger questions and ask questions that really couldn’t have been addressed before.

Jamie:

What I’d like to maybe first do is tell you a little bit more about myself. I’ve always been interested in science and computers. This is a picture of me back in seventh grade with my friend Jay and we didn’t actually have color photography back then. This is just an image from my hometown newspaper. And the photograph accompanied an article that was describing a new computer lab that had opened up in my middle school. It’s really impossible to overstate the impact that this lab had on me personally and also on my fellow students.

Jamie:

This lab gave us access to computers, which weren’t as powerful as the ones that we have access to today and weren’t quite as easy to use, but at the time this was really just something that many of us had never experienced before. We used these computers in a number of ways. We used them to create banners using clunky and noisy dot matrix printers. We used them to play games of course. But most importantly, we used fairly rudimentary but easy to use software to become better students.

Jamie:

We used them to study history and economics. I used a program to dissect a virtual frog, learning biology and a number of other topics. So this lab really did help us become better students. And as I thought about this lab a little bit more in the context of today’s talk, I think it really came down to three areas that in particular helped us become better students.

Jamie:

The first was having access to computers that really weren’t available to us previously. The second theme was having the ability to use software that was relatively easy to use for seventh graders. We were able to learn how to program in languages like BASIC and Logo and eventually higher level programming languages.

Jamie:

And then thirdly, it created a physical environment, a collaborative learning environment where we could help each other learn these topics together. I’d like to explore how these three themes in particular are allowing Google to help researchers.

Jamie:

So the first of these themes is big compute. We’re at the Big Compute Conference. And the image that we see before us is an image of one of Google’s many data centers. Google has regions all over the world and within these regions, we have many data centers and within those data centers, we have rooms that look like this. Maybe they’re not always lit in a blue light and look quite as pretty as this, but lots and lots of computers at our disposal.

Jamie:

But the really innovative capability that’s been mentioned maybe in a couple of the earlier talks is that cloud computing really fundamentally changes the game. It gives you access to a scale of compute as well as access to the very latest processors, hardware accelerators like graphics processing units, tensor processing units or TPUs, SSDs, incredibly fast networks and other capabilities, but at a scale and also delivered under a model that’s available on a pay-as-you-go basis.

Jamie:

And so what this means is that anybody can get access to thousands of computers for one hour for the same price as one computer for a thousand hours. And because it’s at the same price, this really is the fundamental game changer. It allows us to get answers to questions that are embarrassingly parallel, where we can take advantage of this model, in literally one-thousandth of the time at no incremental cost.
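
To make that arithmetic concrete, here is a minimal sketch. The hourly price and the job size are made-up numbers, not Google Cloud rates, and the sketch assumes the work divides perfectly across machines, which only holds for embarrassingly parallel jobs.

```python
# Hypothetical numbers for illustration only; not actual Google Cloud pricing.
PRICE_PER_MACHINE_HOUR = 0.05  # assumed flat pay-as-you-go rate, in dollars
TOTAL_MACHINE_HOURS = 1000     # total work in an embarrassingly parallel job


def cost_and_wall_time(machines):
    """Return (cost in dollars, wall-clock hours) when work is split evenly."""
    wall_hours = TOTAL_MACHINE_HOURS / machines
    cost = machines * wall_hours * PRICE_PER_MACHINE_HOUR
    return cost, wall_hours


one_machine = cost_and_wall_time(1)
thousand_machines = cost_and_wall_time(1000)
print("1 machine:     cost, hours =", one_machine)
print("1000 machines: cost, hours =", thousand_machines)
```

Under these assumptions the cost is the same in both cases, while the wall-clock time drops from a thousand hours to one.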

Jamie:

I’d like you to think about this for a moment and consider some of the types of questions that you would be able to answer significantly faster and what you would do with that time that otherwise you would have spent waiting for these resources.

Jamie:

Now, the second area is related to easy to use software. The image that we see behind me is the web console for one of my favorite Google Cloud services, AutoML Vision. AutoML is a technique of machine learning where you use a range of models, testing your data sets to see which of these models might be the best fit. In this case with the AutoML Vision API, we’re using an ImageNet approach to have a base model upon which we overlay a final model that’s based on the data that you’ve uploaded to the service.

Jamie:

This is incredibly powerful because for people like me who were actually trained in marine biology and not computer science, in literally just a few minutes, I’m able to upload a dataset to the cloud and provide labels. You have a CSV that tells the service which of these files, in this case, are one breed of dog versus another, maybe a St. Bernard versus a Cocker Spaniel. The service will automatically divide that up into test and training datasets and then run through all of the different models and see which one’s the best fit for you.
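
The split into training and test data can be sketched in a few lines. This is only an illustration of the idea, not how the AutoML Vision service does it: the file names, the 80/20 ratio and the `split_rows` helper are all assumptions made for the example.

```python
import random

# Hypothetical labeled rows, as they might appear in an uploaded CSV:
# (image file name, label). The file names are invented.
rows = [
    (f"dog_{i:03d}.jpg", "st_bernard" if i % 2 == 0 else "cocker_spaniel")
    for i in range(100)
]


def split_rows(rows, test_fraction=0.2, seed=0):
    """Shuffle rows reproducibly and split them into (train, test) lists."""
    shuffled = rows[:]                     # copy so the input is not modified
    random.Random(seed).shuffle(shuffled)  # fixed seed gives a repeatable split
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


train_rows, test_rows = split_rows(rows)
print(len(train_rows), "training rows,", len(test_rows), "test rows")
```

Holding back rows the model never sees during training is what lets you compare candidate models fairly.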

Jamie:

And just a couple of minutes later, you have a web API that you can then embed into your applications using this model. You don’t have to program a single line of TensorFlow or PyTorch. You don’t have to be a machine learning expert but you reap all of those benefits.

Jamie:

Now I did this myself using an image of one of my dogs. This is Poppy, she’s a labradoodle. And as you can see the service correctly identified her dog breed. Now this is a fairly minor accomplishment, being able to determine that my dog is in fact a labradoodle but the thing that’s really compelling about this to me is that I and anybody else in the world now have access to some of the world’s most powerful machine learning technologies.

Jamie:

So the second theme that describes the way that Google is helping to accelerate the pace of discovery is making software, incredibly powerful software, be it batch processing or machine learning or data analysis and visualization tools, accessible and available to anybody.

Jamie:

Now, as many of you know, research is really never done in a vacuum. Individual researchers rarely tackle a problem on their own. Frequently they will collaborate with others, either in their own institution, maybe at a university or a national laboratory or maybe with some of their colleagues that they met at a conference.

Jamie:

They might live on the other side of the country or they might live on the other side of the planet. Now it’s not always easy to physically get together and historically these research collaborations have struggled with questions like, “Whose data center are we going to use to store this data? Whose computers are we going to use? And can I actually get access to your network or do we have to go talk to the network admins to try to poke some holes in the firewall?”

Jamie:

These types of questions would slow down scientific collaborations and cause researchers to maybe not be able to move as quickly as they would like to otherwise. Google provides a global world class network. We provide easy utilities for securely managing your resources, your projects and your organizations within Google Cloud infrastructure and using your identities across all of the collaborating institutions and researchers to seamlessly and securely provide access to those resources that each of you need to be able to leverage in answering questions.

Jamie:

You can also do things like have a shared data storage environment, but have each institution providing their own compute resources for which they’re financially responsible instead of having to figure out how to share funding that’s coming from different grants.

Jamie:

So creating this environment that’s akin to that physical collaborative computer lab that I had in middle school, but doing this at a global scale and giving you access to world-class compute, network and storage resources, helps researchers more easily collaborate with each other.

Jamie:

We’ve now talked about these three basic themes of the ways that Google’s helping to accelerate the pace of discovery. I’d like you to take a moment now and think about your first encounter with science or engineering that was inspiring to you.

Jamie:

For me, it was the time when I was I think in first grade, when my parents took me… I grew up in Ohio. They took me to the NASA Glenn Research Center, I think at the time it was called the NASA Lewis Research Center, in Cleveland. And we went into an area where researchers were conducting experiments that children and other visitors could participate in. And one researcher had this brick made out of some kind of light material and they were blasting it with a propane torch and it started glowing red hot and they handed it to me and I was expecting to be burned, but of course I wasn’t and it was cool to the touch.

Jamie:

And this was obviously one of the heat shields that would be used for the space shuttle program, which was really just getting started at the time. And this experience blew my mind. I had just seen how technology was being used to develop a type of material that hadn’t existed before. And so leaving that visit with an armload of photos and papers that the researchers had given me, I wrote my first report on the space shuttle.

Jamie:

Now I’m fortunate because in my day job, I now get to work with these same institutions. I get to work with NASA for example. This is an image of myself in the clean room at NASA Jet Propulsion Laboratory where satellites going back to Voyager and the latest Mars Rover, Mars 2020 have been constructed.

Jamie:

And so I’d like to now talk about a way that NASA is using Google Cloud. NASA has a number of missions and mission directorates that they focus on. One of these, and probably one of the most important, is trying to understand: does life exist outside of our planet and outside of our solar system? And so the way that you try to answer this question is by first trying to find those planets where life, maybe looking something like what we’ve seen on Earth or maybe looking a little bit different, could exist.

Jamie:

So finding those planets is the first approach. And the way that you do that of course, is you have observatories either in space or on the ground that are looking at stars over time. And they’re looking for dips in light that occur as planets that are in orbit around these stars, or exoplanets, pass between the star and that space telescope or that ground-based observatory.

Jamie:

And you monitor these dips in luminosity over time. And you also pay close attention to any shifts in color that occur. And with this information, you can tell the size of the planet, the temperature. You can even start to infer the atmospheric composition if a planet does have an atmosphere. And with this information, we can then target our future investigations on those planets that are most likely to potentially be habitable to lifeforms.
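
As a rough illustration of how a dip in brightness relates to planet size, the sketch below uses the textbook geometric relation that the fractional dip is approximately the square of the planet-to-star radius ratio. It is not the machine learning approach described later in this talk, and the light curve is synthetic, with an invented 1% dip and no noise.

```python
import math

# Synthetic, noise-free light curve: normalized brightness per observation.
# The 1% dip is an invented value standing in for a planet crossing its star.
flux = [1.0] * 20 + [0.99] * 5 + [1.0] * 20


def transit_depth(flux):
    """Fractional drop in brightness: median baseline minus the minimum."""
    baseline = sorted(flux)[len(flux) // 2]
    return (baseline - min(flux)) / baseline


def radius_ratio(depth):
    """Planet-to-star radius ratio, using depth ~ (R_planet / R_star) ** 2."""
    return math.sqrt(depth)


depth = transit_depth(flux)
ratio = radius_ratio(depth)
print("depth:", round(depth, 4), "radius ratio:", round(ratio, 3))
```

Real light curves are noisy and need detrending and repeated transits before a dip can be trusted, which is part of why the analysis described here was so time-consuming.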

Jamie:

NASA had a challenge. Missions such as Kepler and TESS and even the Hubble Space Telescope, and soon the James Webb Space Telescope, generate tens of terabytes of data each month. Now, while that might not sound like a lot of data, since a terabyte isn’t what it used to be, it would typically take two to three days to analyze just one of these light curves using the best algorithms that were available at the time.

Jamie:

And this produced results that were about 93-94% accurate. And so NASA’s Frontier Development Laboratory reached out to Google to see if there was a way that we could help them maybe analyze this data a bit faster and maybe even improve the accuracy of these models.

Jamie:

So working together, what we did was actually help NASA use that same service that I used to identify my dog as a labradoodle to analyze these light curves. We used the AutoML Vision service to create a model that, instead of taking two or three days, now took less than a second to process one of these light curves.

Jamie:

Now, if we think about the scale of compute resources that Google has and the ease of using our software, we’ve now combined two of these themes to allow NASA to be able to analyze all of this data effectively immediately.

Jamie:

Now previously, it would have taken years to go through the backlog of light curve data that’s already been collected. And if you think about the amount of time that’s going to be required to analyze data from future missions like the James Webb Space Telescope, this now allows NASA to immediately jump to the point where they’re able to start thinking about their next mission, potentially accelerating by years the design of that next mission, allowing them to start to build future spacecraft, think about the science, the types of investigations that they’d like to make and hopefully get us that much closer to answering the question of whether or not life exists outside of Earth.

Jamie:

I’d like to tell a different story. August 23rd, 1992, two things about this date are really important to me. First of all, this was the day that I arrived in Coral Gables, Florida. I had just driven for a week and a half with my family in the minivan from Ohio down to Florida, Southeastern Florida. It was also the day that Hurricane Andrew, a Category 5 hurricane, hit Southeastern Florida.

Jamie:

The eye of the storm actually passed right over the University of Miami, where I was about to become a biological oceanography student. The building that you see up in the corner was right across the street from my dorm. Now my dorm was a concrete bunker with steel hurricane shutters. My parents actually spent the night with me there along with many other parents of new students and so we were safe.

Jamie:

The hurricane center was broadcasting throughout the storm and you can see on top of the building, there’s a radar dome up there. And they were using this to track the storm and its intensity. They were using this tool to actually detect the tornadoes that were starting to hit our campus, and that hit other areas like Homestead even harder. But around 2:00 in the morning, I think it was, the meteorologist apologized and said they wouldn’t be able to give us any more updates because that radar dome had just blown off the roof of the building.

Jamie:

The next day we saw the immense damage that the storm had left. We saw cars that were stacked on top of each other. There were trees, of course, that were uprooted and the city was really in a true disaster state. My parents’ vehicle wasn’t damaged. So they were able to start slowly working their way out of Southeastern Florida and start heading North again.

Jamie:

I stayed at the university hoping to begin studies. Unfortunately, the university ran out of water. We ran out of food. And so they started sending students back. I ended up greeting my parents at the door when they ultimately got home and then was able to resume classes a little bit later.

Jamie:

But the reason I shared the story of this storm, which was actually the most significant natural disaster in US history at the time and caused over $26.5 billion of damage, is because these storms are unfortunately happening with greater regularity, greater frequency and they’re becoming even more severe.

Jamie:

And so Google worked with Clemson University researchers who had really two goals in mind. One of their goals was to really just understand how they could more effectively take advantage of large scale big compute resources from Google Cloud.

Jamie:

And secondly, they wanted to tackle a specific problem that would be able to benefit our world. And one of these was how do we help people evacuate in advance of hurricanes and flooding and other severe weather events so that, one, more people actually do evacuate and, two, they can get out of these zones much faster.

Jamie:

And so we worked with Clemson to help them provision over 2 million cores of compute on Google Cloud to analyze millions of hours of traffic data so that they could simulate different approaches to evacuating from zones that were about to be impacted by hurricanes and flooding and come up with better recommendations for public safety agencies that were directing these evacuations.

Jamie:

Now, the really interesting thing about this is that Clemson was able to spin up these compute resources across hundreds of thousands of virtual machines, ultimately launching over 2.1 million cores of compute, actually run the simulation, process all of these videos, come up with tools and techniques that they could then use to help us evacuate more quickly from these types of storms in the future, and tear everything down in literally just a few hours.

Jamie:

Think about the immense capability that this now offers to tackle problems of this scope and magnitude. You’re getting access to millions of core hours, which was really just not possible before. This is a question that probably just couldn’t have been asked before the advent of cloud computing.

Jamie:

Now, as I mentioned before, we all know that the earth is getting a bit warmer and these types of storms are going to be happening more frequently and one of the ways that we know that this is the case is through the use of coupled climate models. These coupled models actually integrate a number of lower level models.

Jamie:

They look at models that try to predict ice coverage. They look at cloud cover, sea surface temperature, the temperature of the earth, all kinds of factors that collectively will help us better understand the effects of climate change so that we can mitigate and adapt to these.

Jamie:

And these types of models rely on massive amounts of input data. By massive, I mean typically petabytes or tens of petabytes of input data. They obviously need large scale big compute resources, and the models themselves produce tens of petabytes of output, which then needs to be analyzed so that researchers can make recommendations and help us to understand the direction that this is going.

Jamie:

One way that Google is helping us better understand the impacts of climate change is by hosting a number of public data sets. One of the most commonly used coupled models is the CMIP6 model. And on Google, we have a public data set that we’ve launched just in the past month that hosts over a hundred terabytes of the ultimately 20 petabytes of CMIP6 model output data so that researchers don’t have to physically copy this data to their own institutions.

Jamie:

They don’t even have to create a Google Cloud project and copy the data themselves or even foot that bill. We’re hosting this at our expense. And we’re hosting this both in our object store and our BigQuery data warehousing service with a commitment to bring more and more of this data online as more researchers begin to use it.

Jamie:

Now it’s one thing to have that data and have access to the large scale compute resources. But if we think back to that computer lab that I had in middle school, the thing that really made it useful to us was having easy to use software. And that’s why we have partnered with Pangeo to create tutorials and other resources that help climate researchers better understand how to work with this data.

Jamie:

This article, CMIP6 in the Cloud Five Ways, describes first how you would typically work with this data, by downloading it to your own laptop or to your own data center. Secondly, it shows you how you can do exactly the same thing in the cloud. It’s a little bit faster because we have faster network connections and you’re not throttled based on the size of datasets. If you’re working with relatively small data, it’s about a wash. You get the same results.

Jamie:

But where things really get interesting is when you start to follow the tutorials on launching clusters using Dask and other scheduling mechanisms to really start to analyze this data at scale, ultimately allowing you to ask the class of questions that are much more difficult, if not impossible, to ask with traditionally available compute resources.

Jamie:

I urge you, if this is a question or a topic that’s of interest to you, to take a look at this article. There’s a great set of lessons as well as sample code written in both Julia and Python.

Jamie:

Okay. I’d like to now talk about a different topic. And this is how we helped NOAA tackle kind of an unusual challenge. So humpback whales, they were listed as an endangered species back in the ’70s but fortunately, due to some changing policies, they’re actually now doing quite well around the world in most of their populations. NOAA has the responsibility of monitoring the success of this recovery.

Jamie:

And one of the ways that they go about this is by using undersea listening stations or hydrophones that are recording massive amounts of data, hundreds of terabytes of audio data, that allow researchers to track the position of these whales. Now, humpbacks are interesting because they each have their own dialect and this dialect changes over time for individual whales, and the type of sounds that an individual makes will appear different based on the temperature of the ocean or the depth at which they’re vocalizing. Let’s actually listen to one of these recordings for a moment.

Jamie:

Now, if we look at this, we see one way of visualizing that data. The way that NOAA was trying to approach this was by thinking about, “Could we listen to this data and try to identify individual whales, maybe use some form of crowdsourcing to tackle this data?” But there was just too much audio data. It would literally take 20 years just to process by human ear the amount of data that had been recorded in the preceding years.

Jamie:

And so NOAA turned to Google and said, “Hey, is there a better way? Maybe we could use some of these machine learning services that you’ve been telling us about?” What we did was we actually came up with a way to visualize the hydrophone data and turn it into an image that once again, we could analyze with the Vision API. And in doing so, we were able to help NOAA identify a technique that’s now incredibly efficient and was able to process, I think, hundreds of terabytes of humpback whale acoustic data in literally just a couple of days.
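
One common way to turn audio into an image is a spectrogram: split the recording into short frames and measure how much energy each frame has at each frequency. The sketch below is an assumption about the general idea, not the pipeline NOAA and Google actually built. It uses a synthetic 100 Hz tone instead of hydrophone audio and a naive DFT in plain Python; real code would read audio files and use an FFT library.

```python
import cmath
import math

SAMPLE_RATE = 800  # samples per second (an arbitrary value for this sketch)
FRAME_SIZE = 80    # samples per frame (also arbitrary)

# Synthetic one-second "recording": a pure 100 Hz tone.
signal = [math.sin(2 * math.pi * 100 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE)]


def spectrogram(samples, frame_size=FRAME_SIZE):
    """Return a time-by-frequency grid of DFT magnitudes, one row per frame."""
    grid = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        row = []
        for k in range(frame_size // 2):  # keep the non-negative frequencies
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * n / frame_size)
                for n, x in enumerate(frame)
            )
            row.append(abs(coeff))
        grid.append(row)
    return grid


grid = spectrogram(signal)
bin_hz = SAMPLE_RATE / FRAME_SIZE  # width of one frequency bin in Hz
loudest_bin = max(range(len(grid[0])), key=lambda k: grid[0][k])
print(len(grid), "frames x", len(grid[0]), "bins; loudest at", loudest_bin * bin_hz, "Hz")
```

Each value in the grid can be mapped to a pixel brightness, which gives an image that an image classification model can be trained on.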

Jamie:

And so now they have a technique that could not only be used to analyze data that had been collected previously, but the same model can now be exported to a standalone file that could be deployed using one of our edge TPUs, one of our disconnected machine learning hardware accelerator chips, so that it could then be embedded in underwater devices that might not have a connection to the internet and therefore might not be able to take advantage of cloud services delivered over web services APIs.

Jamie:

I live in Seattle and I’m really fortunate in that I get to see some pretty interesting wildlife. I like to do photography. This isn’t my picture. I wish that it were, but literally just a couple of days ago on Saturday, we had a pod of orcas that passed less than half a mile away from my house. It’s a fairly common occurrence to see orcas from the ferries or even from the beach.

Jamie:

Now, orcas, unlike humpback whales, are actually not doing so well. Especially the Puget Sound resident orcas, which feed on salmon and not other marine mammals, they’ve actually been in decline. There are only about 73 of these left in the Salish Sea, of which Puget Sound is a part. And NOAA is interested in trying to help understand the effects of pollution in streams and also in Puget Sound and other bodies of water and the impact that that has on these populations and being able to direct recovery efforts more precisely.

Jamie:

And so they’ve actually now been able to use that same technique of using undersea hydrophones to monitor the location of a specific whale so that if there’s an oil spill or if there’s an exercise that’s been planned or if there’s specific marine activity, that activity can be diverted so that it doesn’t have a negative impact on orcas. So we’re hoping that this will help NOAA drive a similar recovery for the Puget Sound resident orca population.

Jamie:

One more story that I’d like to share actually comes from 2015. If you remember the news in 2015, it wasn’t too dissimilar from the news that we’re hearing today. There was an outbreak of a disease called Zika and Zika was actually very similar to the novel coronavirus in a number of ways. About 20% of the patients who were infected with the Zika virus were asymptomatic.

Jamie:

We had restrictions on air travel. There were talks of larger scale quarantines and the Olympics were to be hosted in Brazil that year. And there was even a discussion about potentially canceling the Olympics that year. Fortunately, we were able to control this outbreak and one of the techniques and one of the tools that was used to do this was the use of big data analytics and large scale simulations.

Jamie:

And one of the organizations that helped with this effort was Northeastern University, actually working with the Fred Hutchinson Research Center in Seattle that was mentioned in the previous talk. And researchers at Northeastern University in the MOBS lab used Google Cloud to run millions of what-if simulations that were looking at the various factors that were contributing to the outbreak of the Zika virus, which is of course a mosquito-borne disease.

Jamie:

They were looking at the environmental conditions, weather and other factors that might determine how many mosquitoes might be thriving in a given area, down to the county level in states like Florida and other parts of the Southeast. They were looking at socioeconomic factors that might contribute or might help us better understand which human populations might be more at risk of mosquito exposure. And they were also looking at the rate of travel and how that was changing.

Jamie:

And so by running some of these models and simulations and producing visualization tools, which were then shared with other researchers around the world, using Google Cloud Services, they were able to help public health officials better direct limited resources so that we could focus on those areas that were most at risk of the Zika outbreaks.

Jamie:

Now, these techniques were using big compute resources. They were using software services from Google Cloud and ultimately they were able to direct these recovery efforts at the county level, if not more granular levels. And the same organization, the MOBS lab at Northeastern University, is now tackling the problem of the novel coronavirus. I don’t have a lot of details that I can share here about use of Google Cloud, but I would encourage you to take a look at their website, links below, and over time, we hope to have more information to share with you. They’re a little bit busy right now.

Jamie:

So in conclusion, I’d just like each of you to think about the ways that you might be able to use the power of cloud computing to tackle questions that you might not have thought possible before, to think about the ways that cloud could impact your world through the power of science and engineering and research. And also to think about these three themes that I’ve discussed today.

Jamie:

First, the power of big compute, the ability to access effectively limitless compute resources using the latest hardware accelerators and other technologies. Secondly, access to easy to use machine learning services and other software. And third, what’s possible when it’s easy for you to collaborate with people next door to you, as well as people on the other side of the planet. And so with that, I’d like to thank you for your time and I’ll be happy to take your questions.

Speaker:

There are many traditional high performance computing customers here in the audience that have been doing things on premises and are wondering, should I go to the cloud, should I jump in? And I wonder if you could talk a little bit about Google’s investments recently to help those kinds of people consider moving their compute and storage resources to the cloud.

Jamie:

Sure. Great question. And thank you for that. Google has been making a number of investments to support traditional high performance computing. When I think of high performance computing, I think of both embarrassingly parallel computation, like many of the types of examples that I’ve discussed previously, as well as more tightly coupled applications that rely on things like low latency networking and other hardware accelerators, for example. Google has been innovating across the board to make these types of workloads easier.

Jamie:

If you’ve been paying close attention to some of the advancements that we’ve made on the networking layer, we’re building new network accelerators that are helping to reduce the latency of node to node communication, making MPI type applications run far more efficiently.

Jamie:

We’ve continued to build out our high speed network and build peering relationships with the national research and engineering networks that make it easier to move petabytes of data between traditional data centers and the cloud so that researchers that might be focusing on fields like high energy physics don’t need to only run their computation in one location or the other. They can more easily distribute those workloads across both types of environments.

Jamie:

We’re working with each of the major scheduling software companies to build integration. So if you’re using something like Slurm or LSF, they have native plugins that can automatically provision and deprovision compute resources. Of course, Rescale makes all of this easy and also brings fantastic relationships with the software vendors that produce these traditional HPC tools and makes both the software and the hardware available on a utility basis.

Jamie:

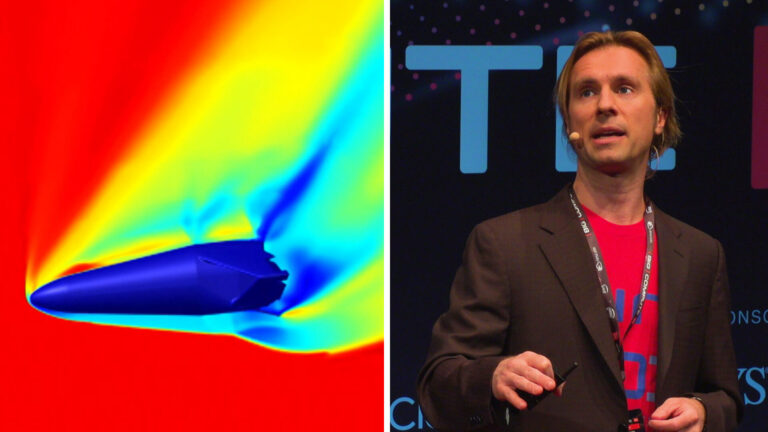

And then continuing to innovate in the hardware accelerators that are used, not just for machine learning applications, but also to support things like computational fluid dynamics workloads. Those are just a few of the ways but happy to talk about more of these later if you’d like.

Speaker:

I was curious if Google had been thinking about the application of low latency satellites. I think there are a few major constellations coming online in the next five years or so, like SpaceX. I think Google was an investor in SpaceX and of course Amazon has Project Kuiper. Is there any application to extremely low latency connections throughout the entire globe?

Jamie:

Yeah, it’s a good question. And not one that I can speak about publicly, mostly because I haven’t been directly involved in any of those projects. I will say that Google has built out a pretty amazing global network. The same network, actually, that you would use to watch YouTube videos from anywhere in the world with incredibly low latency and local caching means that we actually have network points of presence pretty much anywhere in the world.

Jamie:

And so that’s part of the infrastructure that you would need to support the types of workloads that you were describing. I don’t have any specific services that I can talk about publicly at this time, but I’m happy to talk with you in more detail about this if this is something that you’d like to bring to the cloud.

Jamie:

Well, thanks again for your time and I hope you have a great day.